How to implement caching in Node.js

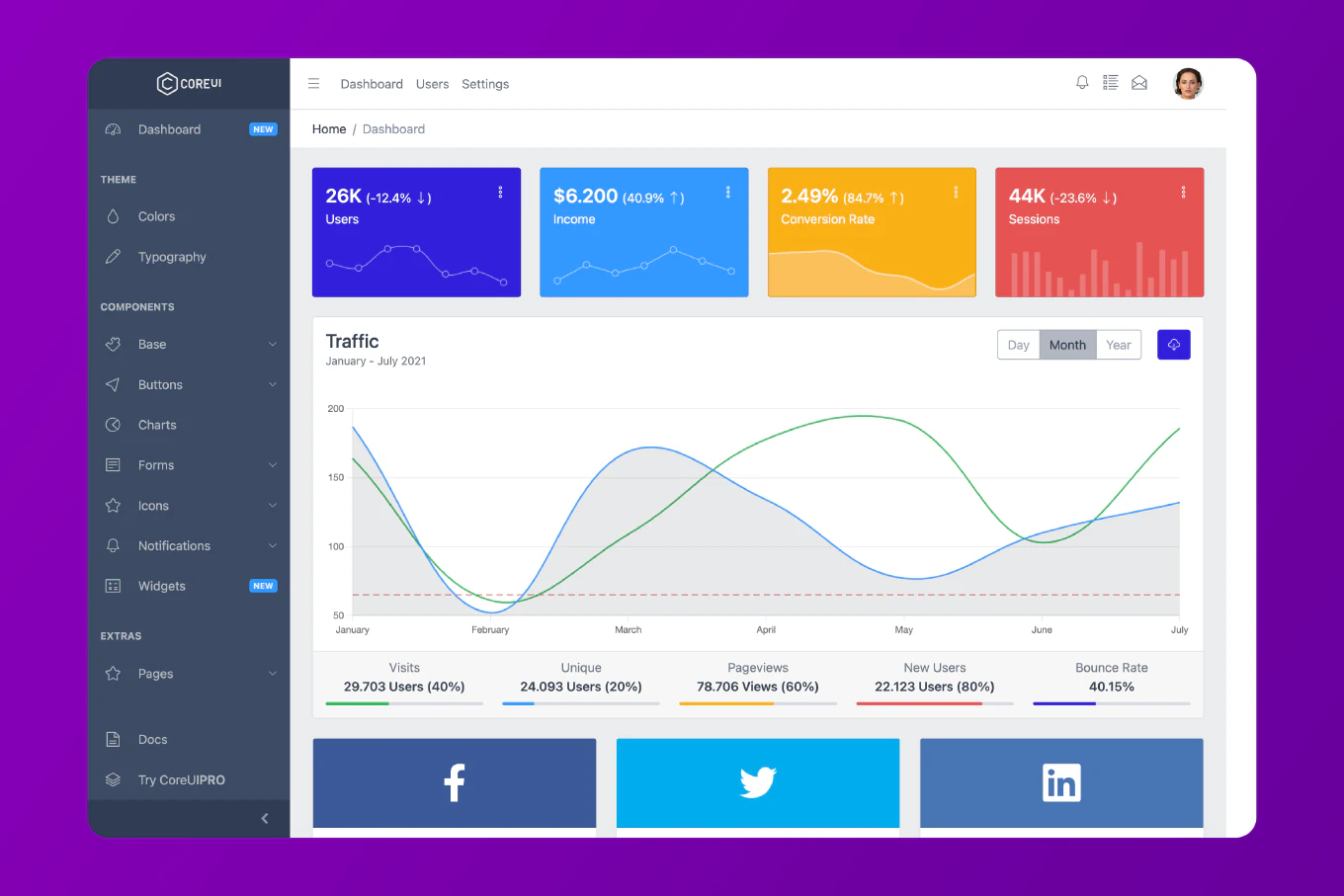

Caching improves application performance by keeping frequently accessed data in memory, which reduces database queries and external API calls and shortens response times. As the creator of CoreUI, a widely used open-source UI library, I’ve implemented caching strategies in high-traffic Node.js applications throughout my 12 years of backend development since 2014. The most effective approach combines in-memory caching for simple, single-server use cases with Redis for distributed caching across multiple servers. With both backends behind a common interface, you get flexible cache invalidation and TTL management, and you can scale from a single server to a distributed architecture by changing configuration rather than application code.

Implement an in-memory cache with a Map, create a Redis client for distributed caching, and build a cache middleware for Express routes.

```bash
npm install ioredis express
```

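```javascript
// src/cache/memory-cache.js

class MemoryCache {
  constructor() {
    this.cache = new Map()
    this.timers = new Map()
  }

  set(key, value, ttl = 0) {
    // Always cancel a pending expiry so an old timer cannot evict the new value
    this.clearTimer(key)
    this.cache.set(key, value)

    if (ttl > 0) {
      const timer = setTimeout(() => this.delete(key), ttl * 1000)
      // Don't keep the Node.js process alive just for cache expiry
      timer.unref()
      this.timers.set(key, timer)
    }

    return true
  }

  get(key) {
    return this.cache.get(key)
  }

  has(key) {
    return this.cache.has(key)
  }

  delete(key) {
    this.clearTimer(key)
    return this.cache.delete(key)
  }

  // Accepts the same '*' patterns as RedisCache.clear(); only '*' is a wildcard here
  clear(pattern) {
    if (!pattern || pattern === '*') {
      this.timers.forEach(timer => clearTimeout(timer))
      this.timers.clear()
      this.cache.clear()
      return true
    }

    const escaped = pattern.replace(/[.+?^${}()|[\]\\]/g, '\\$&')
    const regex = new RegExp('^' + escaped.replace(/\*/g, '.*') + '$')

    for (const key of this.keys()) {
      if (regex.test(key)) {
        this.delete(key)
      }
    }

    return true
  }

  clearTimer(key) {
    const timer = this.timers.get(key)
    if (timer) {
      clearTimeout(timer)
      this.timers.delete(key)
    }
  }

  size() {
    return this.cache.size
  }

  keys() {
    return Array.from(this.cache.keys())
  }
}

module.exports = new MemoryCache()
```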
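The MemoryCache above creates one timer per key. A common alternative is lazy expiration: store an expiry timestamp with each value and evict stale entries when they are read, so no timers are needed. The sketch below is our own; LazyTtlCache and its injectable now() clock are hypothetical names, not part of the article's code, and it keeps the same set/get/has/delete shape.

```javascript
// Hypothetical alternative to MemoryCache: lazy expiration without timers.
// Each entry stores an absolute expiry time; stale entries are dropped on access.
class LazyTtlCache {
  constructor(now = () => Date.now()) {
    this.now = now            // injectable clock, handy for tests
    this.entries = new Map()  // key -> { value, expiresAt }
  }

  set(key, value, ttl = 0) {
    // ttl is in seconds, matching MemoryCache; 0 means "never expires"
    const expiresAt = ttl > 0 ? this.now() + ttl * 1000 : Infinity
    this.entries.set(key, { value, expiresAt })
    return true
  }

  // Returns the entry if it exists and has not expired, evicting it otherwise
  liveEntry(key) {
    const entry = this.entries.get(key)
    if (entry && entry.expiresAt <= this.now()) {
      this.entries.delete(key)
      return undefined
    }
    return entry
  }

  get(key) {
    const entry = this.liveEntry(key)
    return entry ? entry.value : undefined
  }

  has(key) {
    return this.liveEntry(key) !== undefined
  }

  delete(key) {
    return this.entries.delete(key)
  }

  // Optional sweep to reclaim memory from keys that are never read again
  prune() {
    const now = this.now()
    for (const [key, entry] of this.entries) {
      if (entry.expiresAt <= now) {
        this.entries.delete(key)
      }
    }
  }
}
```

The trade-off is that an expired entry keeps using memory until it is read again or prune() runs, for example from a periodic setInterval.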

```javascript
// src/cache/redis-cache.js

const Redis = require('ioredis')

class RedisCache {
  constructor(config = {}) {
    this.client = new Redis({
      host: config.host || 'localhost',
      port: config.port || 6379,
      password: config.password,
      db: config.db || 0,
      // Reconnect with a linear backoff capped at 2 seconds
      retryStrategy: (times) => Math.min(times * 50, 2000)
    })

    this.client.on('error', (err) => {
      console.error('Redis error:', err)
    })

    this.client.on('connect', () => {
      console.log('Redis connected')
    })
  }

  async set(key, value, ttl = 0) {
    try {
      // Redis stores strings, so values are serialized as JSON
      const serialized = JSON.stringify(value)
      if (ttl > 0) {
        await this.client.setex(key, ttl, serialized)
      } else {
        await this.client.set(key, serialized)
      }
      return true
    } catch (error) {
      console.error('Redis set error:', error)
      return false
    }
  }

  async get(key) {
    try {
      const value = await this.client.get(key)
      // ioredis returns null for missing keys
      return value ? JSON.parse(value) : null
    } catch (error) {
      console.error('Redis get error:', error)
      return null
    }
  }

  async has(key) {
    try {
      const exists = await this.client.exists(key)
      return exists === 1
    } catch (error) {
      console.error('Redis exists error:', error)
      return false
    }
  }

  async delete(key) {
    try {
      await this.client.del(key)
      return true
    } catch (error) {
      console.error('Redis delete error:', error)
      return false
    }
  }

  // Iterates with SCAN instead of KEYS so large keyspaces don't block the Redis server
  async clear(pattern = '*') {
    try {
      const stream = this.client.scanStream({ match: pattern, count: 100 })
      for await (const keys of stream) {
        if (keys.length > 0) {
          await this.client.del(...keys)
        }
      }
      return true
    } catch (error) {
      console.error('Redis clear error:', error)
      return false
    }
  }

  async increment(key, amount = 1) {
    try {
      return await this.client.incrby(key, amount)
    } catch (error) {
      console.error('Redis increment error:', error)
      return null
    }
  }

  async expire(key, ttl) {
    try {
      await this.client.expire(key, ttl)
      return true
    } catch (error) {
      console.error('Redis expire error:', error)
      return false
    }
  }

  disconnect() {
    this.client.disconnect()
  }
}

module.exports = RedisCache
```
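Because RedisCache stores values with JSON.stringify, anything JSON cannot represent does not survive the round trip: Date objects come back as ISO-8601 strings, and Map, Set, and undefined values are lost. A sketch of one workaround, assuming you control both the write and read side: a JSON.parse reviver that turns ISO timestamp strings back into Date objects. The serialize/deserialize helpers and the regex are our own, not ioredis features.

```javascript
// Hypothetical helpers for RedisCache: restore Date objects after a JSON round trip.
// JSON.stringify converts a Date to an ISO-8601 string via Date.prototype.toJSON.
const ISO_DATE = /^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(\.\d{3})?Z$/

function serialize(value) {
  return JSON.stringify(value)
}

// The reviver runs for every key/value pair; matching strings become Dates again
function deserialize(text) {
  return JSON.parse(text, (key, value) =>
    typeof value === 'string' && ISO_DATE.test(value) ? new Date(value) : value
  )
}

const user = { id: 1, createdAt: new Date('2024-01-15T10:30:00.000Z') }

const plain = JSON.parse(serialize(user))
console.log(typeof plain.createdAt) // prints: string

const revived = deserialize(serialize(user))
console.log(revived.createdAt instanceof Date) // prints: true
```

To use it, RedisCache.get() would call deserialize(value) instead of JSON.parse(value). Note that any string field that merely looks like an ISO timestamp will also be converted to a Date.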

```javascript
// src/cache/cache-manager.js

const memoryCache = require('./memory-cache')
const RedisCache = require('./redis-cache')

class CacheManager {
  constructor(type = 'memory', config = {}) {
    // Both backends expose the same get/set/has/delete/clear methods
    if (type === 'redis') {
      this.cache = new RedisCache(config)
    } else {
      this.cache = memoryCache
    }
  }

  async get(key) {
    return this.cache.get(key)
  }

  async set(key, value, ttl) {
    return this.cache.set(key, value, ttl)
  }

  async has(key) {
    return this.cache.has(key)
  }

  async delete(key) {
    return this.cache.delete(key)
  }

  async clear(pattern) {
    return this.cache.clear(pattern)
  }

  // Cache-aside: return the cached value, or compute it with fn() and cache the result
  async wrap(key, fn, ttl = 300) {
    const cached = await this.get(key)
    // MemoryCache returns undefined and RedisCache returns null on a miss
    if (cached !== null && cached !== undefined) {
      return cached
    }

    const result = await fn()
    await this.set(key, result, ttl)
    return result
  }

  // Joins key parts with ':' and skips null, undefined, and empty parts (but keeps 0)
  generateKey(...parts) {
    return parts
      .filter(part => part !== null && part !== undefined && part !== '')
      .join(':')
  }
}

module.exports = CacheManager
```
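wrap() runs fn() for every caller that misses, so when a popular key expires under load, many concurrent requests can recompute the same value at once (a cache stampede). One common mitigation, often called single-flight or request coalescing, keeps the in-flight promise per key so concurrent misses share one computation. The sketch below is a standalone function rather than a change to CacheManager; singleFlightWrap and the Map-backed demo cache are hypothetical, and it only coalesces calls within one Node.js process.

```javascript
// Hypothetical stampede-safe variant of CacheManager.wrap().
// Concurrent misses for the same key share one in-flight promise, so fn() runs once.
function singleFlightWrap(cache) {
  const inFlight = new Map() // key -> promise for the value being computed

  return async function wrap(key, fn, ttl = 300) {
    const cached = await cache.get(key)
    if (cached !== null && cached !== undefined) {
      return cached
    }

    // Another caller is already computing this key: share its result
    if (inFlight.has(key)) {
      return inFlight.get(key)
    }

    // fn() is invoked in a later microtask, after the promise is registered below
    const promise = Promise.resolve()
      .then(() => fn())
      .then(async (result) => {
        await cache.set(key, result, ttl)
        return result
      })
      .finally(() => inFlight.delete(key)) // allow a retry after success or failure

    inFlight.set(key, promise)
    return promise
  }
}

// Minimal Map-backed cache with the same async get/set shape (TTL ignored here)
const store = new Map()
const demoCache = {
  async get(key) { return store.get(key) },
  async set(key, value) { store.set(key, value); return true }
}

const wrap = singleFlightWrap(demoCache)
let calls = 0
const slowStats = async () => {
  calls++
  await new Promise(resolve => setTimeout(resolve, 50))
  return { total: 1000 }
}

Promise.all([wrap('stats', slowStats), wrap('stats', slowStats), wrap('stats', slowStats)])
  .then(results => console.log(calls, results.length)) // prints: 1 3
```

Failed computations are not cached, so the next caller retries. With several servers sharing Redis, each process can still compute the value once on a miss; coordinating across servers would need a distributed lock, which this sketch does not attempt.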

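```javascript
// src/middleware/cache-middleware.js

function cacheMiddleware(cacheManager, options = {}) {
  const {
    ttl = 300,
    keyPrefix = 'cache',
    skipCache = false
  } = options

  return async (req, res, next) => {
    // Only cache safe, idempotent GET requests
    if (req.method !== 'GET') {
      return next()
    }

    // Bypass the cache via options or a ?nocache=1 query parameter
    if (skipCache || req.query.nocache) {
      return next()
    }

    // The full URL (path + query string) gives each endpoint/parameter combination its own key
    const cacheKey = cacheManager.generateKey(keyPrefix, req.originalUrl || req.url)

    try {
      const cachedResponse = await cacheManager.get(cacheKey)
      if (cachedResponse !== null && cachedResponse !== undefined) {
        return res.json(cachedResponse)
      }

      // Wrap res.json so the route's response is stored on the way out
      const originalJson = res.json.bind(res)
      res.json = (body) => {
        // Don't cache error responses
        if (res.statusCode >= 200 && res.statusCode < 300) {
          cacheManager.set(cacheKey, body, ttl).catch(err => {
            console.error('Cache set error:', err)
          })
        }
        return originalJson(body)
      }
    } catch (error) {
      // A cache failure should never break the request; fall through to the handler
      console.error('Cache middleware error:', error)
    }

    next()
  }
}

module.exports = cacheMiddleware
```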

```javascript
// src/server.js

const express = require('express')
const CacheManager = require('./cache/cache-manager')
const cacheMiddleware = require('./middleware/cache-middleware')

const app = express()

// Use new CacheManager('memory') for a single-server setup
const cache = new CacheManager('redis', {
  host: 'localhost',
  port: 6379
})

// Middleware caching: the whole JSON response is cached per URL for 5 minutes
app.get('/api/users',
  cacheMiddleware(cache, { ttl: 300, keyPrefix: 'users' }),
  async (req, res, next) => {
    try {
      const users = await fetchUsersFromDatabase()
      res.json(users)
    } catch (error) {
      next(error)
    }
  }
)

// Manual cache-aside for a single user (10-minute TTL)
app.get('/api/user/:id', async (req, res, next) => {
  try {
    const userId = req.params.id
    const cacheKey = cache.generateKey('user', userId)

    const cachedUser = await cache.get(cacheKey)
    if (cachedUser) {
      return res.json(cachedUser)
    }

    const user = await fetchUserFromDatabase(userId)
    await cache.set(cacheKey, user, 600)
    res.json(user)
  } catch (error) {
    next(error)
  }
})

// wrap() handles the get/compute/set sequence (1-hour TTL)
app.get('/api/stats', async (req, res, next) => {
  try {
    const stats = await cache.wrap('stats:daily', () => calculateExpensiveStats(), 3600)
    res.json(stats)
  } catch (error) {
    next(error)
  }
})

// Invalidate affected cache entries after a write
app.delete('/api/user/:id', async (req, res, next) => {
  try {
    const userId = req.params.id
    await deleteUserFromDatabase(userId)
    await cache.delete(cache.generateKey('user', userId))
    // Drop every cached /api/users response (middleware keys start with 'users:')
    await cache.clear('users:*')
    res.json({ success: true })
  } catch (error) {
    next(error)
  }
})

// Placeholder data-access functions; replace with real database calls
async function fetchUsersFromDatabase() {
  return [
    { id: 1, name: 'John' },
    { id: 2, name: 'Jane' }
  ]
}

async function fetchUserFromDatabase(id) {
  return { id, name: 'John' }
}

async function deleteUserFromDatabase(id) {
  console.log(`Deleting user ${id}`)
}

async function calculateExpensiveStats() {
  return { total: 1000, active: 750 }
}

app.listen(3000, () => {
  console.log('Server running on port 3000')
})
```

Here the MemoryCache class implements simple in-memory caching with a Map for single-server applications, and its TTL implementation uses setTimeout to delete expired entries automatically. The RedisCache class provides distributed caching through the ioredis client for multi-server deployments; JSON serialization lets it store objects and arrays as Redis string values. The CacheManager abstraction exposes the same methods for both backends, so switching between memory and Redis only changes the constructor arguments, not the route code. Its wrap method implements the cache-aside pattern: it returns the cached value if one exists, and otherwise executes the function and caches the result. The cacheMiddleware intercepts GET requests, serves cached responses when available, and stores new responses automatically. Because the cache key is built from the full URL, including the query string, each endpoint and parameter combination gets its own cache entry.
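Building the key from req.originalUrl means one logical request can map to several keys: ?page=2&limit=10 and ?limit=10&page=2 are cached separately, as are URLs that differ only in tracking parameters. If that matters for your API, a helper along these lines could replace the key generation in the middleware. It is our own sketch: normalizeCacheKey and the ignoredParams option are hypothetical, and it relies on the WHATWG URL API built into Node.js.

```javascript
// Hypothetical helper: build one cache key per logical request.
// Sorting query parameters makes ?page=2&limit=10 and ?limit=10&page=2 share an entry.
function normalizeCacheKey(prefix, originalUrl, ignoredParams = []) {
  // The base URL is only needed because originalUrl is a path, not an absolute URL
  const url = new URL(originalUrl, 'http://localhost')

  for (const name of ignoredParams) {
    url.searchParams.delete(name)
  }
  url.searchParams.sort() // stable sort by parameter name

  const query = url.searchParams.toString()
  return `${prefix}:${url.pathname}${query ? `?${query}` : ''}`
}

console.log(normalizeCacheKey('users', '/api/users?page=2&limit=10'))
// prints: users:/api/users?limit=10&page=2
console.log(normalizeCacheKey('users', '/api/users?limit=10&page=2&utm_source=mail', ['utm_source']))
// prints: users:/api/users?limit=10&page=2
```

The key keeps the same prefix:path shape as before, so pattern-based invalidation such as cache.clear('users:*') still matches it.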

Best Practice Note:

This is the caching strategy we use in CoreUI backend services to optimize API performance and reduce database load. Match your cache invalidation strategy to how your data is updated, use cache warming for predictable traffic patterns to avoid cache stampedes, monitor cache hit rates and adjust TTL values based on actual usage, and use circuit breakers to prevent cascading failures when the cache service is unavailable.
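Two of these recommendations, hit-rate monitoring and a circuit breaker, can be combined in one wrapper around the cache. The sketch below assumes a backend with async get/set methods like CacheManager's; the ResilientCache name, the failure threshold, and the cooldown are illustrative assumptions, not CoreUI code. One caveat: RedisCache as written catches its own errors and returns null or false, so for a breaker like this to see failures, the backend would need to let errors propagate.

```javascript
// Hypothetical wrapper: tracks the hit rate and stops calling a failing cache backend.
// `backend` is any object with async get(key) and set(key, value, ttl) that throw on failure.
class ResilientCache {
  constructor(backend, { failureThreshold = 5, cooldownMs = 30000, now = () => Date.now() } = {}) {
    this.backend = backend
    this.failureThreshold = failureThreshold // consecutive failures before the circuit opens
    this.cooldownMs = cooldownMs             // how long to bypass the cache once open
    this.now = now
    this.failures = 0
    this.openUntil = 0
    this.stats = { hits: 0, misses: 0, errors: 0, bypassed: 0 }
  }

  isOpen() {
    return this.now() < this.openUntil
  }

  recordFailure() {
    this.stats.errors++
    this.failures++
    if (this.failures >= this.failureThreshold) {
      this.openUntil = this.now() + this.cooldownMs
      this.failures = 0
    }
  }

  // Returns undefined on a miss, an error, or while the circuit is open,
  // so callers fall back to the data source instead of failing the request.
  async get(key) {
    if (this.isOpen()) {
      this.stats.bypassed++
      return undefined
    }
    try {
      const value = await this.backend.get(key)
      this.failures = 0
      if (value === null || value === undefined) {
        this.stats.misses++
        return undefined
      }
      this.stats.hits++
      return value
    } catch (error) {
      this.recordFailure()
      return undefined
    }
  }

  async set(key, value, ttl) {
    if (this.isOpen()) {
      return false
    }
    try {
      await this.backend.set(key, value, ttl)
      this.failures = 0
      return true
    } catch (error) {
      this.recordFailure()
      return false
    }
  }

  // Share of successful lookups that were hits (errors and bypasses excluded)
  hitRate() {
    const lookups = this.stats.hits + this.stats.misses
    return lookups === 0 ? 0 : this.stats.hits / lookups
  }
}
```

This is a simplified breaker without a dedicated half-open state: after the cooldown, calls go through again, and the circuit reopens only after another run of consecutive failures. Exporting stats and hitRate() to your metrics system gives the data needed to tune TTL values.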