How to load balance Node.js apps

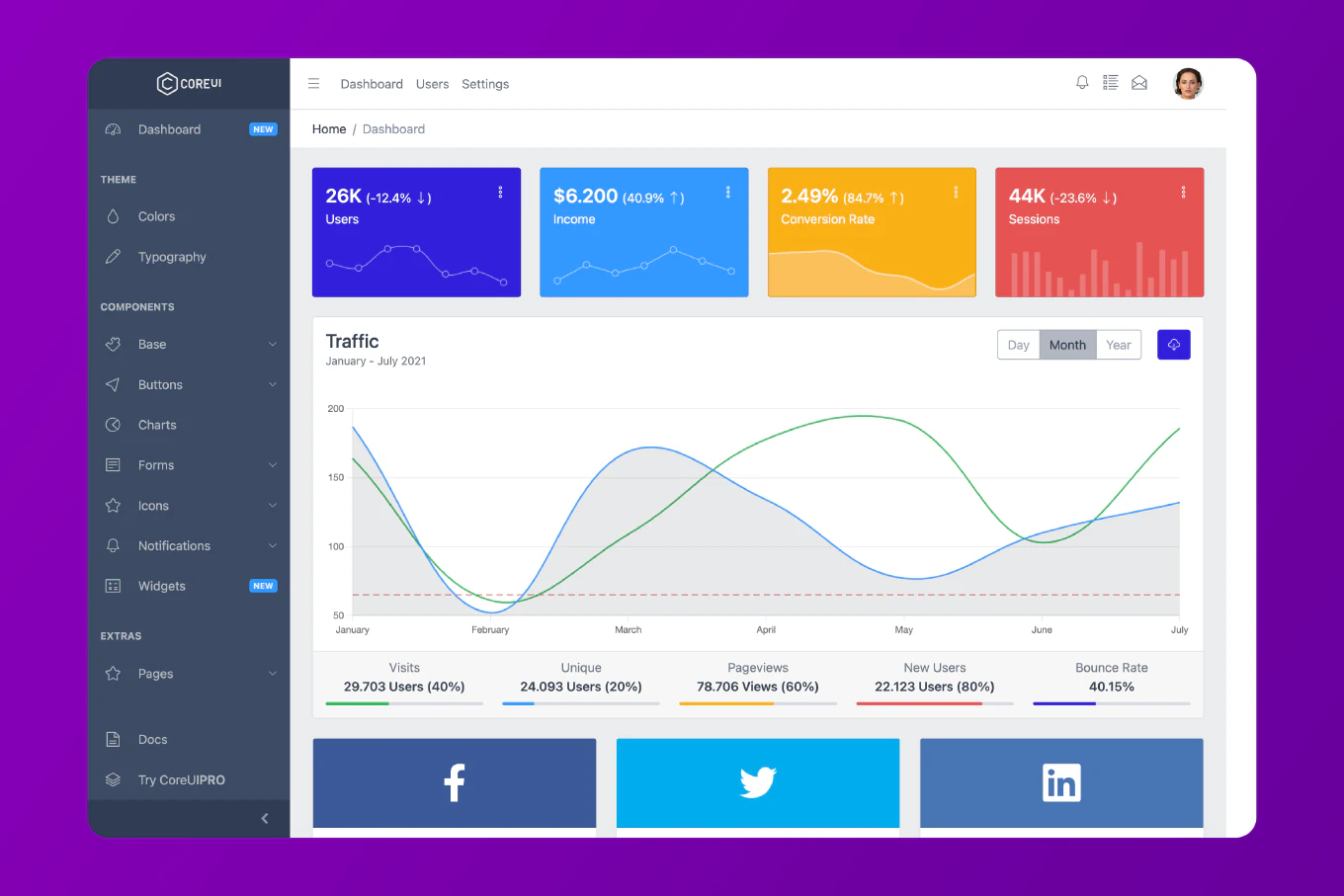

A single Node.js process runs your JavaScript on one thread, so its throughput is capped by one CPU core, and production applications need multiple instances for high availability and horizontal scaling. As the creator of CoreUI with over 12 years of Node.js experience since 2014, I’ve architected load-balanced systems serving millions of requests daily. Load balancing distributes incoming traffic across multiple Node.js processes or servers, improving performance and providing redundancy if instances fail. The most common approach uses Nginx as a reverse proxy to balance requests across multiple Node.js instances.

Use Nginx as a reverse proxy to load balance requests across multiple Node.js application instances.

Install Nginx and configure load balancing in /etc/nginx/nginx.conf:

    http {
        upstream nodejs_backend {
            least_conn;  # omit this line for round robin (the default), or use ip_hash

            server localhost:3000;
            server localhost:3001;
            server localhost:3002;
            server localhost:3003;
        }

        server {
            listen 80;
            server_name example.com;

            location / {
                proxy_pass http://nodejs_backend;

                # HTTP/1.1 plus the Upgrade/Connection headers let WebSocket upgrades pass through
                proxy_http_version 1.1;
                proxy_set_header Upgrade $http_upgrade;
                proxy_set_header Connection 'upgrade';

                # Forward the original host and client address to the Node.js app
                proxy_set_header Host $host;
                proxy_set_header X-Real-IP $remote_addr;
                proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

                proxy_cache_bypass $http_upgrade;
            }
        }
    }

Start multiple Node.js instances on different ports:

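    const express = require('express')

    const app = express()

    // Each instance reads its port from the environment so the same file can run many times
    const PORT = process.env.PORT || 3000

    // Report the port and process ID so you can see which instance handled the request
    app.get('/', (req, res) => {
      res.json({
        message: 'Hello from server',
        port: PORT,
        pid: process.pid
      })
    })

    app.listen(PORT, () => {
      console.log(`Server running on port ${PORT}`)
    })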

Start instances:

    PORT=3000 node app.js &
    PORT=3001 node app.js &
    PORT=3002 node app.js &
    PORT=3003 node app.js &

Best Practice Note

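Nginx supports several load balancing methods: round robin (the default, which hands requests to each server in turn), least_conn (routes to the server with the fewest active connections), and ip_hash (routes a given client IP to the same server, which provides session persistence). Round robin has no directive of its own; you select it by leaving the method out of the upstream block. For session management, use a shared store such as Redis instead of in-memory sessions, since consecutive requests may hit different servers. Use health checks so failed instances are removed from the pool automatically: open source Nginx does this passively through the max_fails and fail_timeout parameters on each server line, while active checks with the health_check directive are part of NGINX Plus. For cloud deployments, use managed load balancers like AWS ALB or Google Cloud Load Balancer. This is how we deploy CoreUI enterprise backends: Nginx load balancing across multiple PM2-managed Node.js clusters for maximum availability and throughput.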
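To make the difference between the three methods concrete, here is a small illustrative model in JavaScript. It is a sketch of the selection ideas only, not Nginx's actual implementation: the function names are hypothetical, the connection counts are made up for the example, and the hash is a simple stand-in (Nginx's ip_hash uses its own hashing based on the client address).

```javascript
// Illustrative model of the three balancing methods; this is not Nginx's code.
const backends = ['localhost:3000', 'localhost:3001', 'localhost:3002', 'localhost:3003']

// Round robin: hand out backends in order, wrapping around at the end.
function createRoundRobin(servers) {
  let next = 0
  return () => {
    const chosen = servers[next]
    next = (next + 1) % servers.length
    return chosen
  }
}

// Least connections: pick the backend with the fewest active connections.
// `activeConnections` is a Map of server -> current connection count.
// On a tie, the server listed first wins.
function pickLeastConn(servers, activeConnections) {
  return servers.reduce((best, server) =>
    (activeConnections.get(server) ?? 0) < (activeConnections.get(best) ?? 0) ? server : best
  )
}

// IP hash: the same client address always maps to the same backend.
// The hash below is a simplified stand-in chosen for illustration.
function pickByIpHash(servers, clientIp) {
  let hash = 0
  for (const ch of clientIp) {
    hash = (hash * 31 + ch.charCodeAt(0)) >>> 0
  }
  return servers[hash % servers.length]
}

const nextBackend = createRoundRobin(backends)
console.log(nextBackend(), nextBackend()) // localhost:3000 localhost:3001

// Made-up connection counts for the example
const load = new Map([
  ['localhost:3000', 5],
  ['localhost:3001', 2],
  ['localhost:3002', 7],
  ['localhost:3003', 2]
])
console.log(pickLeastConn(backends, load)) // localhost:3001

// The same client IP is mapped to the same backend on every call
console.log(pickByIpHash(backends, '203.0.113.7') === pickByIpHash(backends, '203.0.113.7')) // true
```

The model also shows why ip_hash helps with in-memory sessions but does not replace a shared store: if a backend is removed from the list, the mapping changes and some clients land on a server that has never seen their session.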