How to monitor Node.js performance

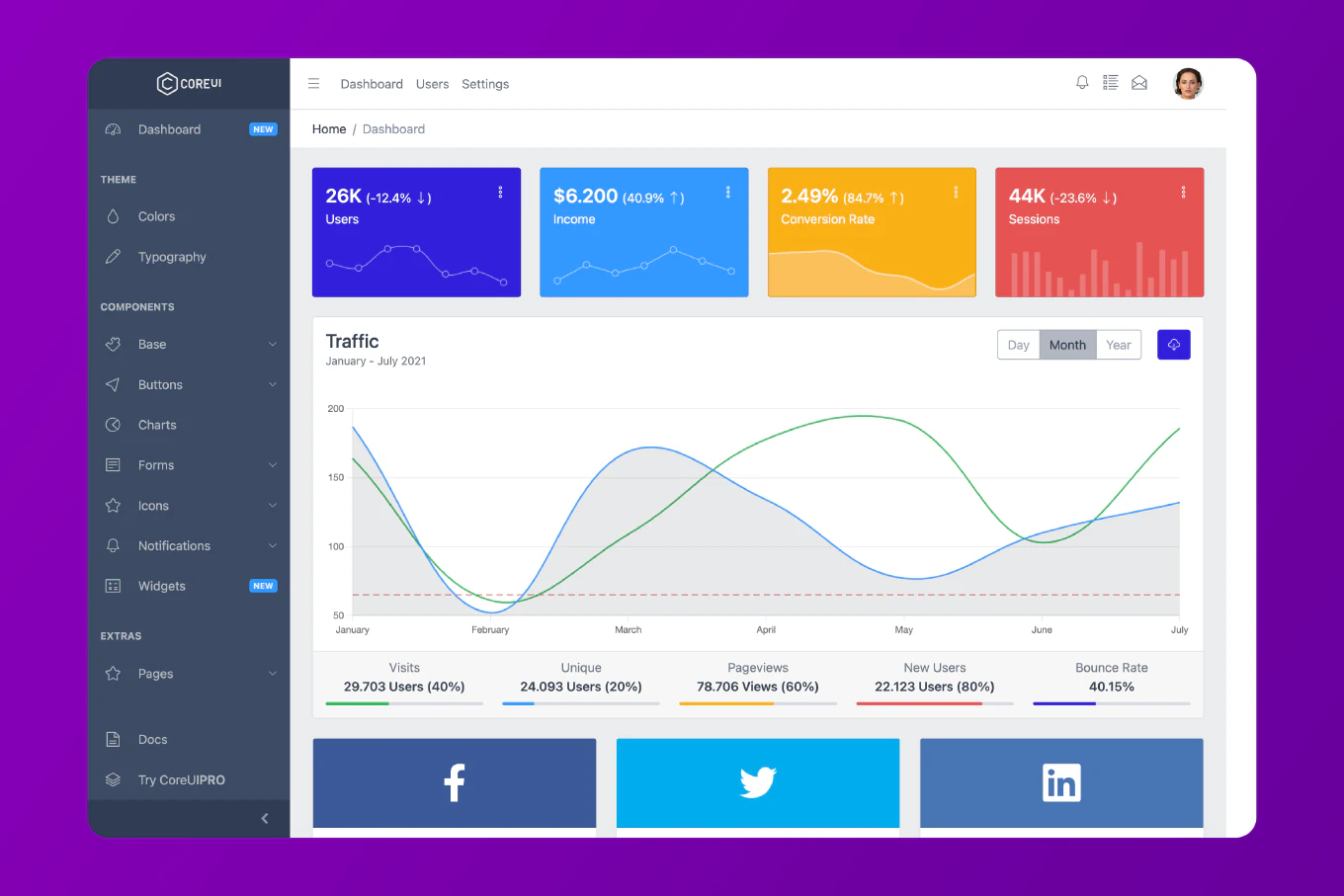

Performance monitoring enables early detection of bottlenecks, memory leaks, and degraded responsiveness in Node.js applications before they impact users. As the creator of CoreUI, a widely used open-source UI library, I’ve implemented performance monitoring in production Node.js services throughout my 11 years of backend development. The most effective approach is to use the built-in Node.js performance hooks and process metrics to track key indicators. This gives real-time visibility into application health with minimal overhead and, apart from the optional Express health endpoint, no external dependencies.

Use performance hooks to measure operations, track process memory and CPU metrics, and monitor event loop lag.

```javascript
const { performance, PerformanceObserver } = require('perf_hooks')

// Monitor event loop lag
let lastCheck = Date.now()

setInterval(() => {
  const now = Date.now()
  const lag = now - lastCheck - 1000

  if (lag > 100) {
    console.warn(`Event loop lag: ${lag}ms`)
  }

  lastCheck = now
}, 1000)

// Monitor memory usage
setInterval(() => {
  const memUsage = process.memoryUsage()

  console.log({
    rss: `${Math.round(memUsage.rss / 1024 / 1024)}MB`,
    heapUsed: `${Math.round(memUsage.heapUsed / 1024 / 1024)}MB`,
    heapTotal: `${Math.round(memUsage.heapTotal / 1024 / 1024)}MB`,
    external: `${Math.round(memUsage.external / 1024 / 1024)}MB`
  })
}, 30000)

// Monitor operation performance
const obs = new PerformanceObserver((items) => {
  items.getEntries().forEach((entry) => {
    console.log(`${entry.name}: ${entry.duration.toFixed(2)}ms`)

    if (entry.duration > 1000) {
      console.warn(`Slow operation detected: ${entry.name}`)
    }
  })
})

obs.observe({ entryTypes: ['measure'] })

// Example: Measure database query
async function fetchUsers() {
  performance.mark('fetchUsers-start')

  // Simulate database query
  await new Promise(resolve => setTimeout(resolve, 500))
  const users = [{ id: 1, name: 'John' }]

  performance.mark('fetchUsers-end')
  performance.measure('fetchUsers', 'fetchUsers-start', 'fetchUsers-end')

  return users
}

// Monitor CPU usage
let lastCpuUsage = process.cpuUsage()

setInterval(() => {
  const currentCpuUsage = process.cpuUsage(lastCpuUsage)
  const userCPU = currentCpuUsage.user / 1000
  const systemCPU = currentCpuUsage.system / 1000

  console.log({
    userCPU: `${userCPU.toFixed(2)}ms`,
    systemCPU: `${systemCPU.toFixed(2)}ms`
  })

  lastCpuUsage = process.cpuUsage()
}, 10000)

// Health check endpoint
const express = require('express')
const app = express()

app.get('/health', (req, res) => {
  const memUsage = process.memoryUsage()
  const uptime = process.uptime()

  res.json({
    status: 'healthy',
    uptime: `${Math.floor(uptime)}s`,
    memory: {
      heapUsed: `${Math.round(memUsage.heapUsed / 1024 / 1024)}MB`,
      heapTotal: `${Math.round(memUsage.heapTotal / 1024 / 1024)}MB`
    }
  })
})

app.listen(3000)
```

Here the event loop lag monitor detects blocking operations by comparing the actual time between timer callbacks with the expected 1000ms interval and warning when the difference exceeds 100ms. Memory monitoring with process.memoryUsage() logs RSS (resident set size), heap usage, and external memory every 30 seconds. The performance.mark and performance.measure API records the duration of specific operations such as database queries, and the PerformanceObserver logs each measurement and flags anything over 1000ms as slow. CPU usage monitoring with process.cpuUsage() reports the user and system CPU time consumed in each 10-second window; the API returns microseconds, so the code divides by 1000 to print milliseconds. The health endpoint exposes uptime and heap usage over HTTP for external monitoring tools.
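As a possible refinement of the timer-based lag check, perf_hooks also ships monitorEventLoopDelay, which samples the delay into a histogram so you can report percentiles rather than a single reading. The sketch below is one way to use it, not a drop-in replacement: the helper name summarizeEventLoopDelay, the 20ms resolution, and the 10-second reporting interval are assumptions made for illustration.

```javascript
const { monitorEventLoopDelay } = require('perf_hooks')

// Sample the event loop delay every 20 ms (an arbitrary resolution for this sketch).
// The histogram records its values in nanoseconds.
const histogram = monitorEventLoopDelay({ resolution: 20 })
histogram.enable()

// Hypothetical helper: convert the nanosecond statistics into a millisecond summary.
function summarizeEventLoopDelay(h) {
  const toMs = (ns) => Number((ns / 1e6).toFixed(2))

  return {
    meanMs: toMs(h.mean),
    p99Ms: toMs(h.percentile(99)),
    maxMs: toMs(h.max)
  }
}

// Report and reset on a fixed interval so each log line covers one window.
const reporter = setInterval(() => {
  console.log(summarizeEventLoopDelay(histogram))
  histogram.reset()
}, 10000)

// Do not keep the process alive only for reporting.
reporter.unref()
```

A rising p99 with a stable mean usually points at occasional long synchronous tasks, which a single lag threshold can miss.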

Best Practice Note:

This is the performance monitoring foundation we use in CoreUI Node.js backend services before integrating comprehensive APM solutions for production. For advanced monitoring with alerting and historical analysis, integrate with APM tools like New Relic or Datadog. Use clinic.js for deep performance profiling and flame graphs during development, and implement custom metrics with libraries like prom-client for Prometheus integration in Kubernetes environments.
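To give a feel for what a Prometheus scrape target returns, here is a minimal sketch that hand-rolls the Prometheus text exposition format using only the built-in http module. It deliberately does not use prom-client, which in a real service would handle this (including default process metrics) for you. The metric names, the renderMetrics and createMetricsServer helpers, and port 9100 are all assumptions made for illustration.

```javascript
const http = require('http')

// Hypothetical helper: render a few process metrics in the Prometheus text format.
// The metric names below are illustrative, not a standard naming scheme.
function renderMetrics() {
  const mem = process.memoryUsage()
  const cpu = process.cpuUsage() // cumulative microseconds since process start

  const metrics = [
    ['app_memory_rss_bytes', 'gauge', 'Resident set size in bytes', mem.rss],
    ['app_heap_used_bytes', 'gauge', 'V8 heap used in bytes', mem.heapUsed],
    ['app_cpu_user_seconds_total', 'counter', 'User CPU time in seconds', cpu.user / 1e6],
    ['app_uptime_seconds', 'gauge', 'Process uptime in seconds', process.uptime()]
  ]

  // Each metric is a HELP line, a TYPE line, and a sample line.
  return metrics
    .map(([name, type, help, value]) =>
      `# HELP ${name} ${help}\n# TYPE ${name} ${type}\n${name} ${value}\n`)
    .join('')
}

// Hypothetical helper: build an HTTP server that serves the metrics on /metrics.
function createMetricsServer() {
  return http.createServer((req, res) => {
    if (req.url === '/metrics') {
      res.writeHead(200, { 'Content-Type': 'text/plain; version=0.0.4' })
      res.end(renderMetrics())
    } else {
      res.writeHead(404)
      res.end()
    }
  })
}

// Print one snapshot; to serve it, call createMetricsServer().listen(9100).
console.log(renderMetrics())
```

Once the metric set grows beyond a handful of gauges, switching to prom-client is likely the better choice, since it manages registries, labels, and histograms.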