How to compress files in Node.js with zlib

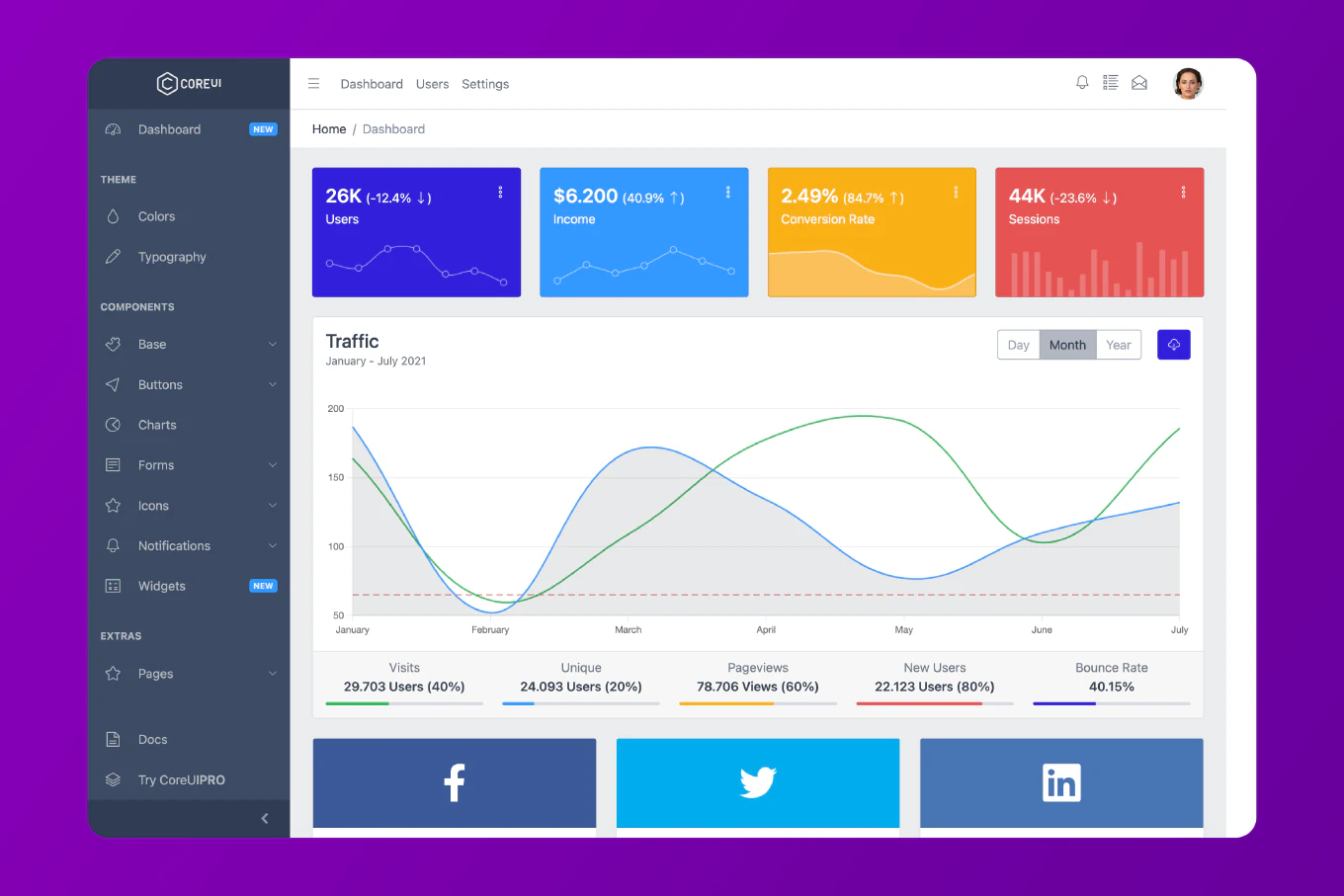

Compressing files in Node.js with zlib reduces file sizes, which saves storage and bandwidth and can improve application performance. With over 25 years of experience in software development and as the creator of CoreUI, I’ve implemented file compression extensively in data archival systems, web optimization, and storage management applications. In my experience, the most effective approach is to connect zlib compression streams with pipeline(), which handles errors and stream cleanup for you. Because the data is processed in chunks, large files can be compressed without loading them fully into memory or blocking the event loop.

Use zlib compression streams with pipeline for memory-efficient file compression and proper error handling.

    const fs = require('fs')
    const zlib = require('zlib')
    const { pipeline } = require('stream')
    const path = require('path')

    function compressFile(inputFile, outputFile) {
      return new Promise((resolve, reject) => {
        const readStream = fs.createReadStream(inputFile)
        const gzipStream = zlib.createGzip()
        const writeStream = fs.createWriteStream(outputFile)

        pipeline(
          readStream,
          gzipStream,
          writeStream,
          (error) => {
            if (error) {
              console.error('Compression failed:', error.message)
              reject(error)
            } else {
              console.log('File compressed successfully')
              resolve()
            }
          }
        )
      })
    }

    // Usage
    async function compressFiles() {
      try {
        await compressFile('large-file.txt', 'large-file.txt.gz')

        // Get compression ratio
        const originalSize = fs.statSync('large-file.txt').size
        const compressedSize = fs.statSync('large-file.txt.gz').size
        const ratio = ((1 - compressedSize / originalSize) * 100).toFixed(2)

        console.log(`Compression ratio: ${ratio}%`)
      } catch (error) {
        console.error('Compression error:', error.message)
      }
    }

    compressFiles()

Here zlib.createGzip() creates a compression stream that transforms data using the gzip algorithm. The pipeline() function connects the read stream, compression stream, and write stream, forwards any error to the final callback, and destroys all three streams if one of them fails. The Promise wrapper enables async/await usage for better control flow. The file size comparison reports the percentage of space saved, which the example labels as the compression ratio.
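The manual Promise wrapper can also be replaced by the Promise-based pipeline() exported from stream/promises. The sketch below assumes Node.js 15 or later, and the function name compressFilePromise is only an illustrative choice, not part of the original example.

```javascript
const fs = require('fs')
const zlib = require('zlib')
const { pipeline } = require('stream/promises')

// Same read -> gzip -> write chain as in the example above.
// This pipeline() returns a Promise, so no manual wrapper is needed:
// it resolves when the output is fully written and rejects on any stream error.
async function compressFilePromise(inputFile, outputFile) {
  await pipeline(
    fs.createReadStream(inputFile),
    zlib.createGzip(),
    fs.createWriteStream(outputFile)
  )
}
```

Errors surface as a rejected Promise, so the caller can handle them with the same try/catch block used in the usage example.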

Best Practice Note:

This is the same approach we use in CoreUI backend systems for log file compression, asset optimization, and data archival processes in production environments. Consider implementing compression level options and file type detection to optimize compression for different content types and use cases.
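As a rough sketch of those two suggestions, the example below passes a level option to zlib.createGzip() and skips files whose extension suggests they are already compressed. The extension list and the names shouldCompress and compressWithLevel are assumptions made for illustration. Checking the extension is only a simple stand-in for real file type detection, and the example assumes Node.js 15 or later for stream/promises.

```javascript
const fs = require('fs')
const path = require('path')
const zlib = require('zlib')
const { pipeline } = require('stream/promises')

// Hypothetical list of extensions whose contents are usually already
// compressed, so gzipping them again rarely saves space.
const ALREADY_COMPRESSED = new Set(['.gz', '.zip', '.png', '.jpg', '.jpeg', '.mp4'])

// Extension-based check only; it does not inspect the file contents.
function shouldCompress(file) {
  return !ALREADY_COMPRESSED.has(path.extname(file).toLowerCase())
}

// level ranges from 0 (no compression) to 9 (smallest output, slowest).
// Resolves to true if the file was compressed, false if it was skipped.
async function compressWithLevel(inputFile, outputFile, level = zlib.constants.Z_BEST_COMPRESSION) {
  if (!shouldCompress(inputFile)) {
    return false
  }
  await pipeline(
    fs.createReadStream(inputFile),
    zlib.createGzip({ level }),
    fs.createWriteStream(outputFile)
  )
  return true
}
```

A lower level such as zlib.constants.Z_BEST_SPEED trades output size for throughput, which may suit frequently rotated log files better than one-off archives.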