How to use streams in Node.js

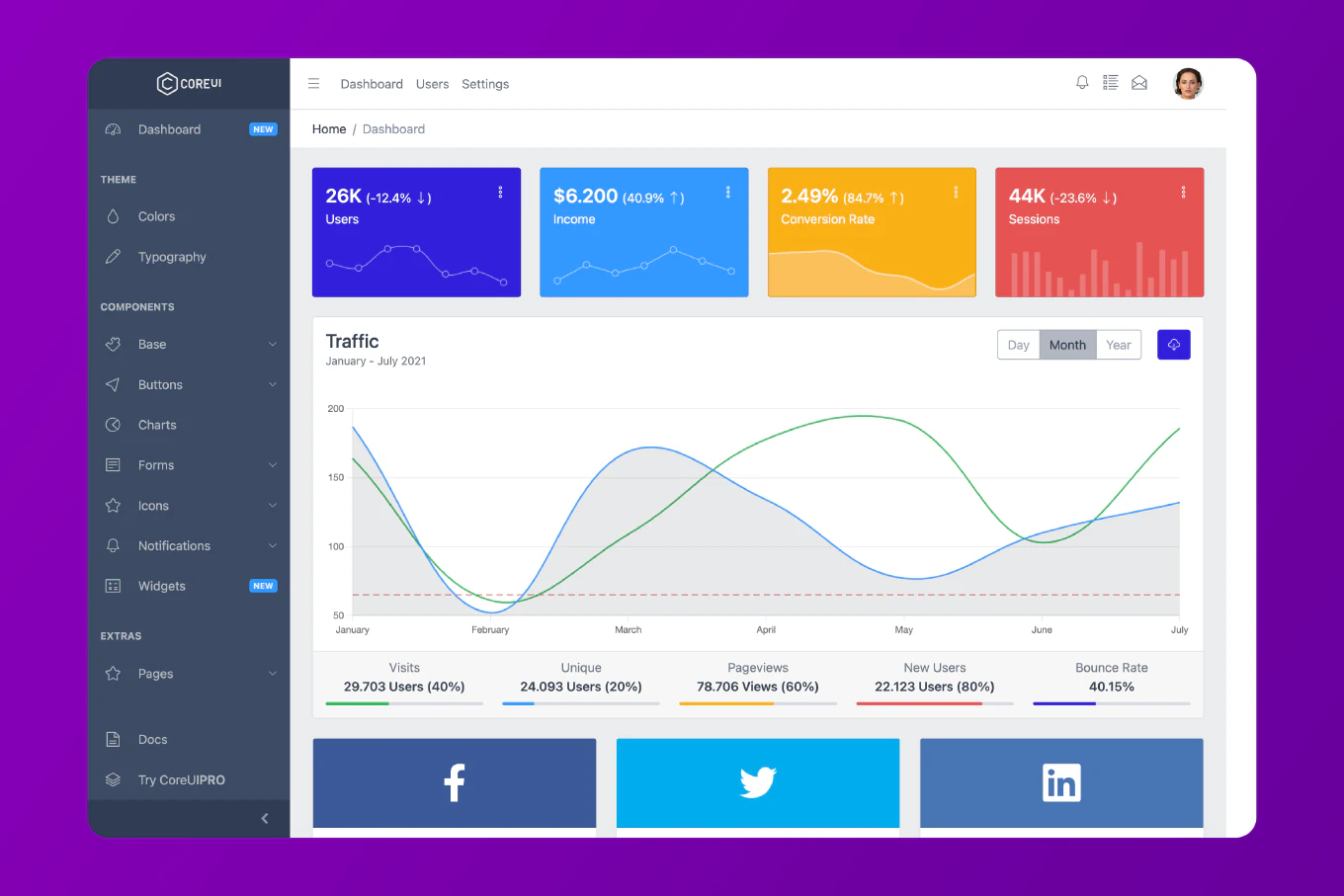

Using streams in Node.js lets you process large amounts of data without loading it all into memory, which makes applications more performant and scalable. As the creator of CoreUI with over 25 years of software development experience, I’ve implemented streams extensively in data processing pipelines, file operations, and real-time applications. In my experience, the most effective approach starts with understanding the four types of streams: readable, writable, duplex, and transform. This pattern suits applications that handle large files, real-time data, or high-throughput operations, because memory use stays bounded no matter how much data flows through.
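The four stream types map directly onto classes exported by the built-in stream module. The sketch below is a minimal, in-memory illustration, assuming Node.js 15 or later for the promise-based pipeline() from stream/promises; the helper names (makeSource, makeCollector, makeUpperCase, makeDuplex, upperCaseAll) are hypothetical and exist only for this example.

```javascript
const { Readable, Writable, Duplex, Transform } = require('stream')
const { pipeline } = require('stream/promises')

// Readable: a source of data. Readable.from() wraps any iterable.
const makeSource = (items) => Readable.from(items)

// Writable: a destination. This one appends each chunk, as a string, to an array.
const makeCollector = (sink) =>
  new Writable({
    write(chunk, encoding, callback) {
      sink.push(chunk.toString())
      callback()
    }
  })

// Transform: a Duplex whose output is computed from its input.
const makeUpperCase = () =>
  new Transform({
    transform(chunk, encoding, callback) {
      callback(null, chunk.toString().toUpperCase())
    }
  })

// Duplex: the readable and writable sides are independent (like a TCP socket).
// Writing to it does not change what it emits.
const makeDuplex = (outgoing, received) => {
  const queue = [...outgoing]
  return new Duplex({
    read() {
      // push(null) signals the end of the readable side
      this.push(queue.length > 0 ? queue.shift() : null)
    },
    write(chunk, encoding, callback) {
      received.push(chunk.toString())
      callback()
    }
  })
}

// Chains readable -> transform -> writable and resolves with the collected output
async function upperCaseAll(items) {
  const out = []
  await pipeline(makeSource(items), makeUpperCase(), makeCollector(out))
  return out.join('')
}
```

For example, upperCaseAll(['hello ', 'streams']) should resolve to 'HELLO STREAMS'.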

Use Node.js streams to process data efficiently without loading large amounts into memory at once.

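```javascript
const fs = require('fs')
const { Transform } = require('stream')

// Source and destination file streams; file data is read in 64 KiB chunks by default.
// Decoding as UTF-8 keeps multi-byte characters intact across chunk boundaries.
const readStream = fs.createReadStream('large-file.txt', { encoding: 'utf8' })
const writeStream = fs.createWriteStream('output.txt')

// Transform stream that upper-cases each chunk as it passes through
const upperCaseTransform = new Transform({
  transform(chunk, encoding, callback) {
    this.push(chunk.toString().toUpperCase())
    callback()
  }
})

// Connect the streams: read -> transform -> write
readStream.pipe(upperCaseTransform).pipe(writeStream)
```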

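Here fs.createReadStream() creates a readable stream from a file, fs.createWriteStream() creates a writable stream for the output, and Transform defines a custom transform stream that converts text to uppercase. The pipe() method connects the streams and manages data flow and backpressure automatically: when the writable side cannot keep up, the readable side is paused until the writable drains. It does not forward errors, however. An error in the read stream, for example, is not passed on to the transform or write stream, and those streams are not closed, so each stream needs its own 'error' handler. Because the file is read in small chunks (64 KiB by default for file streams), memory use stays roughly constant regardless of file size.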
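To make the backpressure behaviour concrete, here is a minimal sketch of the idea behind pipe(), written by hand with an async iterator. It is an illustration, not pipe()'s actual implementation; the function name copyWithBackpressure is hypothetical, it assumes Node.js 15 or later for stream/promises, and it omits error handling.

```javascript
const { once } = require('events')
const { finished } = require('stream/promises')

// Copies every chunk from readable to writable, pausing while the
// writable's internal buffer is full. Resolves with how many times it paused.
async function copyWithBackpressure(readable, writable) {
  let pauses = 0
  for await (const chunk of readable) {
    // write() returns false once the buffered data reaches highWaterMark
    if (!writable.write(chunk)) {
      pauses++
      // Stop pulling from the source until the buffer has drained
      await once(writable, 'drain')
    }
  }
  writable.end()
  // Wait until all buffered data has been flushed to the destination
  await finished(writable)
  return pauses
}
```

Pointing it at a writable with a small highWaterMark, or one that acknowledges writes slowly, is an easy way to watch the loop pause instead of buffering the whole source.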

Best Practice Note:

This is the same approach we use in CoreUI backend services for processing large CSV uploads, log file analysis, and data transformation pipelines. Always handle stream errors, and in production code that chains multiple streams prefer pipeline() from the stream module, which forwards an error from any stage and destroys every stream in the chain when one of them fails.
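As a sketch of that advice, the earlier example can be rewritten with the promise-based pipeline() from stream/promises (available in Node.js 15 and later). The function names upperCaseFile and createUpperCaseTransform are hypothetical. If any stage fails, for instance because the input file does not exist, pipeline() destroys all three streams and the returned promise rejects with that error.

```javascript
const fs = require('fs')
const { Transform } = require('stream')
const { pipeline } = require('stream/promises')

// Upper-cases text; streams cannot be reused, so create one per pipeline run
function createUpperCaseTransform() {
  return new Transform({
    transform(chunk, encoding, callback) {
      // Passing the result to callback() is shorthand for push() followed by callback()
      callback(null, chunk.toString().toUpperCase())
    }
  })
}

// Reads inputPath, upper-cases it, and writes the result to outputPath.
// pipeline() forwards an error from any stage, destroys every stream,
// and rejects the returned promise.
async function upperCaseFile(inputPath, outputPath) {
  await pipeline(
    // Decoding as UTF-8 keeps multi-byte characters intact across chunk boundaries
    fs.createReadStream(inputPath, { encoding: 'utf8' }),
    createUpperCaseTransform(),
    fs.createWriteStream(outputPath)
  )
}
```

Calling upperCaseFile('large-file.txt', 'output.txt') inside a try/catch, or chaining .catch() on it, gives a single place to handle a failure from any of the three streams.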